Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are two of the most transformative technologies of the 21st century. They are revolutionizing industries, driving innovation, and shaping the future in ways that were once the stuff of science fiction. From voice-activated personal assistants like Siri and Alexa to the recommendation engines on Netflix and Amazon, AI and ML are embedded in our daily lives, often without our noticing.

But what exactly are AI and ML? How do they work? And what implications do they have for the future? This post offers an overview of these technologies: their underlying principles, their applications, and the challenges and ethical considerations that accompany them.

What is Artificial Intelligence?

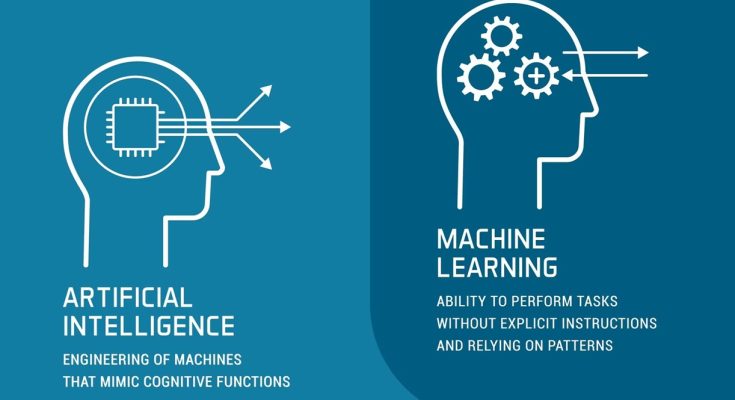

Artificial Intelligence is a branch of computer science that aims to create machines capable of performing tasks that would normally require human intelligence. These tasks include reasoning, learning, problem-solving, perception, and understanding natural language. AI is broadly categorized into two types: Narrow AI and General AI.

- Narrow AI: Also known as Weak AI, Narrow AI is designed to perform a specific task, such as facial recognition, internet searches, or driving a car. Most of the AI systems we interact with today are examples of Narrow AI. They are highly specialized and excel at their designated tasks, but they cannot perform tasks outside of their predefined scope.

- General AI: Also known as Strong AI or AGI (Artificial General Intelligence), General AI refers to a system with the ability to understand, learn, and apply knowledge across a wide range of tasks, much like a human being. General AI remains a theoretical concept, and while it is a major goal of AI research, it has not yet been realized.

The Evolution of AI

AI’s history can be traced back to ancient times when philosophers dreamed of automating human thinking. However, the formal development of AI began in the mid-20th century. Here’s a brief overview of AI’s evolution:

- 1950s-1960s: John McCarthy coined the term “Artificial Intelligence” in the 1955 proposal for the 1956 Dartmouth Conference, which is considered the birth of AI as an academic discipline. Early AI research focused on symbolic methods and problem-solving.

- 1970s-1980s: The field saw the development of expert systems, which were designed to mimic the decision-making abilities of a human expert. However, limited computing power and data, together with expert systems that proved brittle and costly to maintain, led to “AI winters”: periods of reduced funding and interest in AI research in the mid-1970s and again in the late 1980s.

- 1990s-Present: The growth of the internet, the availability of big data, and advances in machine learning, particularly deep learning from the 2010s onward, led to a resurgence in AI research and applications. Milestones have demonstrated AI’s growing capabilities: IBM’s Deep Blue, a search-based chess system, defeated world champion Garry Kasparov in 1997, and DeepMind’s AlphaGo, which combined deep neural networks with tree search, beat Go champion Lee Sedol in 2016.

What is Machine Learning?

Machine Learning is a subset of AI that focuses on developing algorithms that enable computers to learn from data and make decisions based on it. In traditional programming, a developer writes explicit rules that tell the computer how to perform a task. In machine learning, a model is instead trained on a dataset: it learns patterns from examples and uses them to make predictions or decisions without those rules being written by hand.
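The contrast between hand-written rules and learned ones can be made concrete with a minimal Python sketch. It assumes a hypothetical spam-filtering task whose only input is the number of links in an email; the data, the function names, and the threshold of 10 in the hand-written rule are illustrative assumptions. The learned version is a one-feature “decision stump”: it tries each observed value as a cut-off and keeps the one that misclassifies the fewest labeled examples.

```python
# Hypothetical labeled data: (number of links in an email, is_spam)
examples = [(0, False), (1, False), (2, False), (3, False),
            (5, True), (6, True), (8, True), (12, True)]


def is_spam_handwritten(num_links):
    # Traditional programming: the programmer chooses the rule and its threshold.
    return num_links > 10


def learn_threshold(data):
    """Learn t in the rule "spam if num_links > t" by minimizing training errors."""
    best_t, best_errors = None, None
    # Try every observed value as a candidate threshold.
    for t in sorted({x for x, _ in data}):
        errors = sum((x > t) != label for x, label in data)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t, best_errors


handwritten_errors = sum(is_spam_handwritten(x) != label for x, label in examples)
learned_t, learned_errors = learn_threshold(examples)
print(f"hand-written rule: {handwritten_errors} errors")
print(f"learned rule: spam if num_links > {learned_t} ({learned_errors} errors)")
```

On this toy data the learned cut-off is 3 links with no training errors, while the hand-written rule misclassifies three spam messages. Real spam filters use many features and more robust learners; the point is only that the rule comes from the data rather than from the programmer.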

Types of Machine Learning

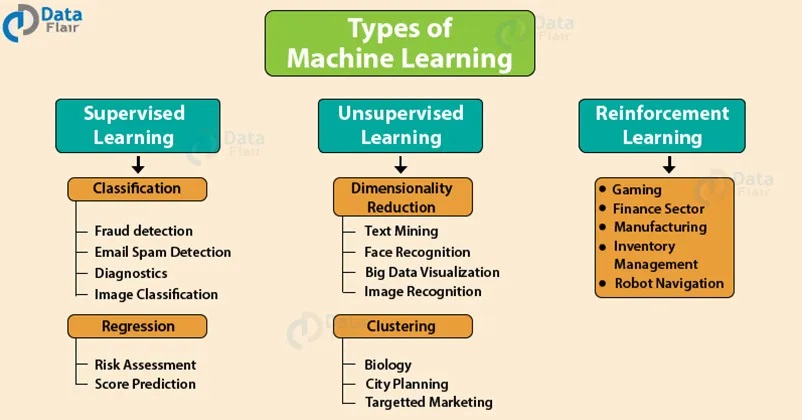

- Supervised Learning: In supervised learning, the model is trained on a labeled dataset, meaning that each training example is paired with an output label. The model learns to map inputs to outputs by minimizing the error between its predictions and the actual labels. Common applications include classification (e.g., spam detection in emails) and regression (e.g., predicting house prices).

- Unsupervised Learning: In unsupervised learning, the model is trained on an unlabeled dataset and must find hidden patterns or intrinsic structure in the data on its own. Common techniques include clustering (e.g., customer segmentation) and dimensionality reduction (e.g., principal component analysis, or PCA, for data visualization).

- Reinforcement Learning: Reinforcement learning involves training an agent to make a sequence of decisions by rewarding or penalizing it based on the actions it takes. The goal is to learn a strategy, or policy, that maximizes the cumulative reward. This approach is widely used in game AI and robotics.
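The clustering idea from the unsupervised-learning bullet can be sketched with k-means, one common clustering algorithm (PCA and other techniques are not shown here). The sketch assumes hypothetical two-dimensional customer data and, for reproducibility, initializes the centroids with the first k points; library implementations usually use randomized or k-means++ initialization instead. No labels are supplied: the algorithm alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. It uses `math.dist`, which requires Python 3.8 or later.

```python
import math


def kmeans(points, k, max_iters=100):
    """Cluster points into k groups; returns (labels, centroids)."""
    # Simplifying assumption: start from the first k points as centroids.
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids


# Hypothetical customers: (visits per month, average spend in tens of dollars).
customers = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (2, 1), (8.5, 9)]
labels, centroids = kmeans(customers, k=2)
print(labels)
print(centroids)
```

Here the low-activity and high-activity customers separate cleanly into two segments. Note that k, the number of clusters, has to be chosen by the user rather than learned.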

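The reinforcement-learning loop can likewise be sketched with tabular Q-learning, one standard RL algorithm, on a hypothetical five-cell corridor: the agent starts in cell 0, can step left or right, and receives a reward of 1 only on reaching cell 4. The environment and the learning rate, discount factor, and exploration rate are illustrative assumptions. The agent is never told which action is correct; it estimates the cumulative (discounted) reward of each action in each state and gradually favors the actions with the highest estimates.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (0, 1)    # 0 = step left, 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount factor, exploration rate


def step(state, action):
    """Hypothetical environment: move one cell; reward 1.0 only when the goal is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy_action(q_row):
    """Pick the action with the highest estimated value, breaking ties at random."""
    best = max(q_row)
    return random.choice([a for a in ACTIONS if q_row[a] == best])


def q_learning(episodes=1000, max_steps=100, seed=0):
    random.seed(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action], initially zero
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability EPSILON, otherwise exploit.
            if random.random() < EPSILON:
                action = random.choice(ACTIONS)
            else:
                action = greedy_action(q[state])
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward reward + discounted best next value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy read off the Q-table should choose “right” (action 1) in every non-goal cell, which is the shortest path to the reward.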
Key Concepts in Machine Learning

- Training Data: The dataset used to train the model. It consists of input-output pairs for supervised learning and only inputs for unsupervised learning.

- Model: The mathematical representation or algorithm that processes the input data to make predictions.

- Features: The individual measurable properties or characteristics of the data used by the model to make predictions.

- Labels: The actual outcomes or classifications used in supervised learning to train the model.

- Overfitting and Underfitting: Overfitting occurs when the model learns the training data too well, including the noise, resulting in poor generalization to new data. Underfitting occurs when the model is too simple to capture the underlying structure of the data, leading to poor performance even on the training data.
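Overfitting and underfitting can be seen side by side in a small experiment. The sketch below uses hypothetical data (y = 2x plus alternating ±1 noise) and compares three models of increasing flexibility: a constant that always predicts the mean label (too simple), a least-squares straight line, and a 1-nearest-neighbor “memorizer” that returns the label of the closest training input. The data and model choices are assumptions made for illustration.

```python
# Hypothetical toy data: y = 2x plus alternating +1/-1 "noise".
train_x = [0, 1, 2, 3, 4, 5, 6, 7]
train_y = [1, 1, 5, 5, 9, 9, 13, 13]
# Held-out inputs between the training points, labeled with the noise-free rule y = 2x.
test_x = [0.4, 1.4, 2.4, 3.4, 4.4, 5.4, 6.4]
test_y = [2 * x for x in test_x]


def mse(model, xs, ys):
    """Mean squared error of a model (a function x -> prediction) on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    """Underfits: ignores x and always predicts the mean training label."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    """Ordinary least-squares straight line y = intercept + slope * x."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_memorizer(xs, ys):
    """Overfits: 1-nearest-neighbor lookup that reproduces every training label, noise included."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


results = {}
for name, fit in [("constant", fit_constant), ("line", fit_line), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(f"{name:>9}: train MSE = {results[name][0]:.2f}, test MSE = {results[name][1]:.2f}")
```

The memorizer reaches zero training error because it reproduces the noise exactly, yet its error on the held-out points is higher than the straight line's; the constant model does poorly on both sets. Comparing training error with held-out error in this way is the usual first check for both problems.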

Applications of AI and ML

AI and ML are being applied across a wide range of industries, driving innovation and efficiency. Some notable applications include:

- Healthcare: AI and ML are used for medical imaging analysis, drug discovery, personalized medicine, and predictive analytics. For example, AI algorithms can analyze medical images such as X-rays and MRI scans to help clinicians detect diseases like cancer.

- Finance: In finance, AI and ML are used for fraud detection, algorithmic trading, risk management, and customer service. Chatbots powered by AI provide 24/7 customer support, while ML models are used to forecast market trends.

- Retail: Retailers use AI and ML for personalized recommendations, inventory management, and optimizing supply chains. Recommendation engines on platforms like Amazon and Netflix analyze user behavior to suggest products or content.

- Autonomous Vehicles: AI and ML are at the core of self-driving cars, enabling them to perceive their environment, make decisions, and navigate safely. Companies like Tesla and Waymo are leading the way in developing autonomous vehicles.

- Natural Language Processing (NLP): NLP, a branch of AI, focuses on the interaction between computers and human language. Applications include voice assistants (e.g., Siri, Alexa), language translation, and sentiment analysis.

- Manufacturing: AI and ML are used in predictive maintenance, quality control, and supply chain optimization in manufacturing industries. Robots and automated systems improve efficiency and reduce human error.
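Recommendation engines like those described under Retail are often built on collaborative filtering. The following is a minimal sketch of one simple variant, user-based collaborative filtering with cosine similarity; it is not how Amazon or Netflix actually implement their systems, and the ratings, user names, and item names are hypothetical. Items a user has not rated are scored by the ratings other users gave them, weighted by how similar those users’ tastes are.

```python
import math

# Hypothetical 1-5 star ratings: user -> {item: rating}.
ratings = {
    "alice": {"film_a": 5, "film_b": 4, "film_c": 1},
    "bob": {"film_a": 4, "film_b": 5, "film_d": 4},
    "carol": {"film_c": 5, "film_d": 1, "film_e": 4},
}


def cosine_similarity(u, v):
    """Cosine similarity of two users' rating vectors, treating unrated items as 0."""
    dot = sum(u[item] * v[item] for item in set(u) & set(v))
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user, ratings):
    """Score unseen items by other users' ratings, weighted by their similarity to `user`."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for item, rating in their_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    # Highest-scoring items first.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


print(recommend("alice", ratings))
```

Alice’s ratings overlap most with Bob’s, so the film Bob rated highly that Alice has not seen (film_d) ranks first. Production systems typically add rating normalization and must handle far larger, sparser data.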

Challenges and Ethical Considerations

Despite the significant advancements and potential of AI and ML, there are several challenges and ethical considerations to address:

- Bias and Fairness: AI and ML models can inherit biases present in the training data, leading to unfair or discriminatory outcomes. Ensuring fairness and transparency in AI systems is a critical challenge.

- Privacy and Security: The use of AI and ML often involves processing large amounts of personal data, raising concerns about privacy and data security. It is essential to develop robust frameworks to protect individuals’ data.

- Job Displacement: As AI and ML automate tasks traditionally performed by humans, there is a growing concern about job displacement and the need for reskilling the workforce.

- Explainability: Many AI models, particularly deep learning models, are considered “black boxes” because they provide little insight into how decisions are made. Developing explainable AI systems is important for trust and accountability.

- Ethical AI: As AI systems become more autonomous, there is a need to ensure they act ethically and in accordance with human values. This includes developing AI that respects human rights and does not cause harm.
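One simple way to check a model for the kind of bias described above is to compare how often it makes a favorable prediction for each group, a criterion known as demographic parity. The sketch below assumes hypothetical loan-approval predictions for two groups, “A” and “B”. Demographic parity is only one of several fairness definitions, and a gap on its own does not establish why the model behaves differently or whether the outcome is unjust.

```python
def demographic_parity_gap(predictions, groups):
    """Return the largest difference in positive-prediction rate between groups, plus the per-group rates."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    # Fraction of favorable (1) predictions within each group.
    rates = {g: sum(preds) / len(preds) for g, preds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs: 1 = loan approved, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

Here group A is approved 75% of the time and group B 25% of the time, a gap of 0.5 that would warrant a closer look at the training data and the features the model relies on.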

The Future of AI and ML

The future of AI and ML is promising, with advancements expected in several areas:

- AI for Good: AI will increasingly be used to tackle global challenges such as climate change, healthcare access, and poverty. AI-powered tools can help optimize resource use, improve healthcare delivery, and enhance education.

- Explainable AI: There will be a growing emphasis on developing AI systems that are transparent and explainable, helping to build trust and accountability.

- AI and Human Collaboration: Rather than replacing humans, AI is expected to complement human abilities, leading to new forms of collaboration between humans and machines.

- AI Regulation: As AI systems become more pervasive, governments and organizations will likely implement regulations to ensure responsible and ethical use of AI.

Conclusion

Artificial Intelligence and Machine Learning are reshaping the world, offering unprecedented opportunities for innovation and growth. However, with these opportunities come significant challenges and responsibilities. Understanding AI and ML is essential for navigating this rapidly evolving landscape and ensuring that these technologies are developed and used in ways that benefit society as a whole.

As AI and ML continue to advance, it is crucial for individuals, organizations, and governments to stay informed and engaged, fostering a future where technology serves humanity’s best interests. Whether you are a tech enthusiast, a business leader, or simply curious about the future, a working knowledge of AI and ML will help you make the most of these transformative technologies.